#Elasticsearch Consulting services

What is Solr – Comparing Apache Solr vs. Elasticsearch

In the world of search engines and data retrieval systems, Apache Solr and Elasticsearch are two prominent contenders, each with its strengths and unique capabilities. These open-source, distributed search platforms play a crucial role in empowering organizations to harness the power of big data and deliver relevant search results efficiently. In this blog, we will delve into the fundamentals of Solr and Elasticsearch, highlighting their key features and comparing their functionalities. Whether you're a developer, data analyst, or IT professional, understanding the differences between Solr and Elasticsearch will help you make informed decisions to meet your specific search and data management needs.

Overview of Apache Solr

Apache Solr is a search platform built on top of the Apache Lucene library, known for its robust indexing and full-text search capabilities. It is written in Java and designed to handle large-scale search and data retrieval tasks. Solr follows a RESTful API approach, making it easy to integrate with different programming languages and frameworks. It offers a rich set of features, including faceted search, hit highlighting, spell checking, and geospatial search, making it a versatile solution for various use cases.

Overview of Elasticsearch

Elasticsearch, also based on Apache Lucene, is a distributed search engine that stands out for its real-time data indexing and analytics capabilities. It is known for its scalability and speed, making it an ideal choice for applications that require near-instantaneous search results. Elasticsearch provides a simple RESTful API, enabling developers to perform complex searches effortlessly. Moreover, it offers support for data visualization through its integration with Kibana, making it a popular choice for log analysis, application monitoring, and other data-driven use cases.

Comparing Solr and Elasticsearch

Data Handling and Indexing

Both Solr and Elasticsearch handle large volumes of data well and offer strong indexing capabilities. Solr accepts documents in several formats, including XML, JSON, and CSV, while Elasticsearch works with JSON throughout, which is generally considered more human-readable and easier to work with. Elasticsearch's dynamic mapping feature automatically infers field types during indexing, streamlining the process further.
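To make dynamic mapping more concrete, here is a minimal sketch in Python of how field types can be inferred from a JSON document. It is a simplified illustration, not Elasticsearch's actual implementation: the function names and the sample document are assumptions, and date detection is reduced to ISO 8601 strings.

```python
from datetime import datetime


def infer_field_type(value):
    """Return a simplified Elasticsearch-style field type for a JSON value."""
    # bool must be checked before int because bool is a subclass of int in Python.
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "float"
    if isinstance(value, str):
        # Rough stand-in for date detection: only ISO 8601 strings are recognised.
        try:
            datetime.fromisoformat(value)
            return "date"
        except ValueError:
            return "text"
    if isinstance(value, dict):
        return "object"
    if isinstance(value, list):
        # Arrays take the type of their first non-null element.
        for item in value:
            if item is not None:
                return infer_field_type(item)
    return None  # null or empty array: no mapping is added yet


def infer_mapping(document):
    """Infer a type for every top-level field of a document."""
    return {field: infer_field_type(value) for field, value in document.items()}


# Hypothetical document, for illustration only.
doc = {
    "title": "Solr vs. Elasticsearch",
    "views": 1200,
    "rating": 4.5,
    "published": "2023-08-01",
    "draft": False,
    "tags": ["search", "lucene"],
}
print(infer_mapping(doc))
```

In a real cluster, string fields are mapped as `text` with a `keyword` sub-field by default, and an explicit mapping can override any inferred type.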

Querying and Searching

Both platforms support complex search queries, but Elasticsearch is often regarded as more developer-friendly due to its clean and straightforward API. Elasticsearch's support for nested queries and aggregations simplifies the process of retrieving and analyzing data. On the other hand, Solr provides a range of query parsers, allowing developers to choose between traditional and advanced syntax options based on their preference and familiarity.
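As an illustration of the nested queries and aggregations mentioned above, the sketch below builds an Elasticsearch search request body as a plain Python dictionary. The field names (`title`, `price`, `brand.keyword`) and values are hypothetical; only the Query DSL keywords (`bool`, `must`, `filter`, `match`, `range`, `terms`, `avg`) come from Elasticsearch itself.

```python
import json

# Full-text match combined with a price filter, plus a terms aggregation
# that buckets hits by brand and computes the average price per bucket.
search_body = {
    "query": {
        "bool": {
            "must": [{"match": {"title": "wireless headphones"}}],
            "filter": [{"range": {"price": {"lte": 200}}}],
        }
    },
    "aggs": {
        "by_brand": {
            "terms": {"field": "brand.keyword"},
            "aggs": {"avg_price": {"avg": {"field": "price"}}},
        }
    },
    "size": 10,
}

# The serialized body is what would be sent to an index's _search endpoint.
print(json.dumps(search_body, indent=2))
```

Solr can express a comparable request through its query parsers and facet parameters, so the difference is mostly one of syntax and ergonomics.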

Scalability and Performance

Elasticsearch is designed with scalability in mind from the ground up, making it relatively easier to scale horizontally by adding more nodes to the cluster. It excels in real-time search and analytics scenarios, making it a top choice for applications with dynamic data streams. Solr, while also scalable, may require more effort for horizontal scaling compared to Elasticsearch.

Community and Ecosystem

Both Solr and Elasticsearch boast active and vibrant open-source communities. Solr has been around longer and, therefore, has a more extensive user base and established ecosystem. Elasticsearch, however, has gained significant momentum over the years, supported by the Elastic Stack, which includes Kibana for data visualization and Beats for data shipping.

Document-Based vs. Schema-Free

Solr has traditionally relied on a predefined schema that declares each field and its type. This gives tight control over how data is indexed, but it can become restrictive when data structures are dynamic or constantly evolving (Solr also offers a schemaless mode). Elasticsearch is often described as schema-free: thanks to dynamic mapping, it accepts documents without an upfront schema, although it still builds a mapping behind the scenes. This makes it convenient for projects with varying data structures.

Conclusion

In summary, Apache Solr and Elasticsearch are both powerful search platforms, each excelling in specific scenarios. Solr's robustness and established ecosystem make it a reliable choice for traditional search applications, while Elasticsearch's real-time capabilities and seamless integration with the Elastic Stack are perfect for modern data-driven projects. Choosing between the two depends on your specific requirements, data complexity, and preferred development style. Regardless of your decision, both Solr and Elasticsearch can supercharge your search and analytics endeavors, bringing efficiency and relevance to your data retrieval processes.

Whether you opt for Solr, Elasticsearch, or a combination of both, the future of search and data exploration remains bright, with technology continually evolving to meet the needs of next-generation applications.

NextBrick's Elasticsearch Consulting: Elevate Your Search and Data Capabilities

In today's data-driven world, Elasticsearch is a critical tool for businesses of all sizes. This powerful open-source search and analytics engine can help you explore, analyze, and visualize your data in ways that were never before possible. However, with its vast capabilities and complex architecture, Elasticsearch can be daunting to implement and manage.

That's where NextBrick's Elasticsearch consulting services come in. Our team of experienced consultants has a deep understanding of Elasticsearch and its capabilities. We can help you with everything from designing and implementing efficient data models to optimizing search queries and troubleshooting performance bottlenecks.

Why Choose NextBrick for Elasticsearch Consulting?

Tailored solutions: We understand that every business is unique. That's why we take the time to understand your specific needs and goals before developing a tailored Elasticsearch solution.

Performance optimization: Our consultants are experts in Elasticsearch performance tuning. We can help you ensure that your Elasticsearch cluster is running smoothly and efficiently, even as your data volume and traffic grow.

Expert support: We offer a wide range of Elasticsearch support services, including 24/7 monitoring, issue resolution, and ongoing performance optimization.

How NextBrick's Elasticsearch Consulting Can Help You

Here are just a few ways that NextBrick's Elasticsearch consulting services can help you:

Improve search relevance: Our consultants can help you design and implement efficient data models and search queries that deliver more relevant results to your users.

Optimize performance: We can help you identify and address performance bottlenecks, ensuring that your Elasticsearch cluster is running smoothly and efficiently.

Troubleshoot issues: Our consultants can help you troubleshoot any Elasticsearch issues that you may encounter, including indexing errors, query performance problems, and security vulnerabilities.

Migrate from other search solutions: If you're currently using a different search solution, we can help you migrate to Elasticsearch with minimal disruption to your business.

Develop custom Elasticsearch applications: Our consultants can help you develop custom Elasticsearch applications to meet your specific needs, such as product search, real-time analytics, and log management.

Elevate Your Search and Data Capabilities with NextBrick

If you're serious about using Elasticsearch to elevate your search and data capabilities, then NextBrick is the partner you need. Our team of experienced consultants can help you with every aspect of Elasticsearch implementation and management, from design to deployment to support.

Contact us today to learn more about our Elasticsearch consulting services and how we can help you achieve your business goals.

Pluto AI: A New Internal AI Platform For Enterprise Growth

Pluto AI

Magyar Telekom, Deutsche Telekom's Hungarian business, launched Pluto AI, a cutting-edge internal AI platform, to capitalise on AI's revolutionary potential. This project is a key step towards the company's objective of incorporating AI into all business operations and empowering all employees to use AI's huge potential.

After realising that AI competence is no longer a luxury but a necessity for future success, Magyar Telekom faced familiar obstacles, such as staff with varying levels of AI understanding and a lack of readily available tools for testing and practical implementation. To address this, the company created a scalable system that could serve many use cases and adapt to changing AI demands, democratising AI knowledge and promoting innovation.

Pluto AI started as a simple prompting tool that gave business teams a safe and compliant way to use generative AI. Business teams were also trained in generative AI and its applications. This strategy drove adoption of generative AI across the company and let the platform serve more use cases quickly, without the core platform staff having to understand every new application.

Pluto AI development

Google Cloud Consulting and Magyar Telekom's AI Team built Pluto AI. This relationship was essential to the platform's compliance with telecom sector security and compliance regulations and best practices.

Pluto AI's modular design lets teams swiftly integrate, change, and update AI models, tools, and architectural patterns. This architecture allows the platform to serve many use cases and grow quickly in line with Magyar Telekom's AI goals. Pluto AI includes Large Language Models (LLMs) for natural language understanding and generation; Retrieval Augmented Generation (RAG), which combines LLMs with internal knowledge sources, including multimodal content, to provide grounded responses with evidence; API access that lets other parts of the organisation integrate AI into their solutions; and code generation and assistance to increase developer productivity.
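The RAG pattern described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Pluto AI's implementation: retrieval is a simple word-overlap ranking over an in-memory list (a real system would query a search index), the knowledge base entries are invented, and the final call to an LLM is left out.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, passages, k=2):
    """Rank passages by how many question words they share; keep the top k."""
    q = tokenize(question)
    scored = [(len(q & tokenize(p["text"])), p) for p in passages]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(question, passages):
    """Assemble a grounded prompt that asks the model to cite source ids."""
    sources = "\n".join(f"[{p['id']}] {p['text']}" for p in passages)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


# Hypothetical internal knowledge base.
knowledge_base = [
    {"id": "hr-1", "text": "Employees may carry over five vacation days."},
    {"id": "it-7", "text": "VPN access requires two-factor authentication."},
    {"id": "hr-2", "text": "Vacation requests need manager approval."},
]
question = "How many vacation days can employees carry over?"
hits = retrieve(question, knowledge_base)
print(build_prompt(question, hits))
```

Passing the source ids into the prompt is what allows the model's answer to point back to evidence, which is the "grounded responses" part of the pattern.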

The platform also lets users develop AI companions for specific business needs.

Pluto AI runs on Compute Engine virtual machines for scalability and reliability. It uses foundation models from the Model Garden on Vertex AI, including Anthropic's Claude 3.5 Sonnet and Google's Gemini, Imagen, and Veo. RAG pipelines use Elasticsearch on Google Cloud for knowledge bases. Other Google Cloud services such as Cloud Logging, Pub/Sub, Cloud Storage, Firestore, and Looker help create production-ready apps.

The user interface and experience were prioritised during development. Pluto AI's user-friendly interface lets employees of any technical ability level use AI without a steep learning curve.

With hundreds of daily active users from various departments, the platform has high adoption rates. Its versatility and usability have earned the platform high praise from employees. Pluto AI supports use cases in knowledge management, software development, legal and compliance, and customer service chatbots.

Pluto AI's impact is measurable. The platform records tens of thousands of API requests and hundreds of unique users daily. A 15% decrease in coding errors and a 20% reduction in legal document review time are expected.

Pluto AI vision and roadmap

Pluto AI is part of Magyar Telekom's long-term AI plan. Plans call for adding departments, business divisions, and markets to the platform. The company is also considering offering Pluto AI to other Deutsche Telekom markets.

A multilingual language selection, an enhanced UI for managing RAG solutions and tracking usage, and agent-based AI technologies for automating complex tasks are envisaged. Monitoring and optimising cloud resource utilisation and costs is another priority.

Pluto AI has made AI usable, approachable, and impactful at Magyar Telekom. Pluto AI sets a new standard for internal AI adoption by enabling experimentation and delivering business advantages.

#PlutoAI#generativeAI#googlecloudPlutoAI#DevelpoingPlutoAI#MagyarTelekom#PlutoAIRoadmap#technology#technews#technologynews#news#govindhtech

Retrospective of 2024 - Long Time Archive

I would like to reflect on what I built last year through the lens of a software architect. This is the first installment in a series of architecture articles.

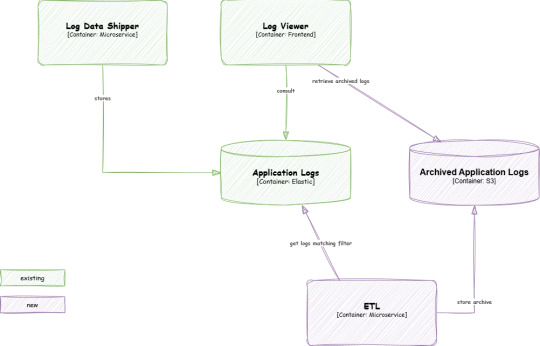

Last year, I had the opportunity to design and implement an archiving service to address GDPR requirements. In this article, I’ll share how we tackled this challenge, focusing on the architectural picture.

Introduction

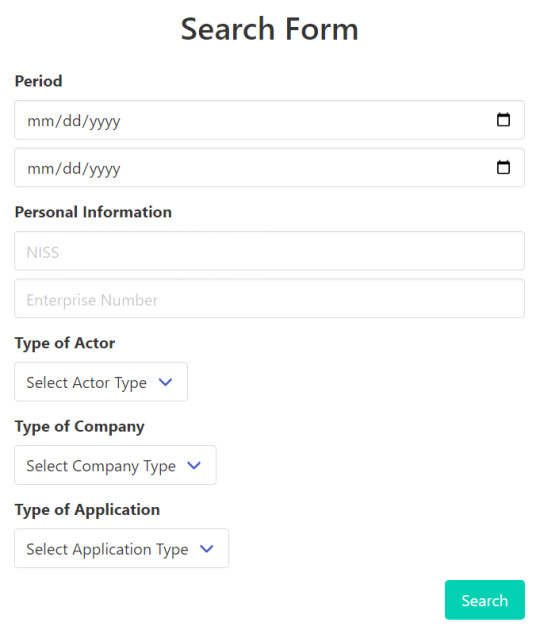

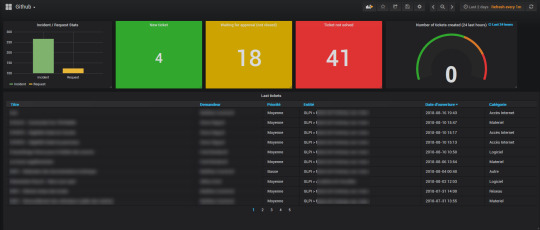

This use case revolves around the Log Data Shipper, a core system component responsible for aggregating logs from multiple applications. These logs are stored in Elasticsearch, where they can be accessed and analyzed through an Angular web application. Users of the application (DPOs) can search the logs according to certain criteria; here is an example of such a search form:

The system then retrieves logs from ES so that the user can sift through them or export them if necessary, basically do what DPOs do.

As the volume of data grows over time, storing it all in Elasticsearch becomes increasingly expensive. To manage these costs, we needed a solution to archive logs older than two years without compromising the ability to retrieve necessary information later. This is where our archiving service comes into play.

Archiving Solution

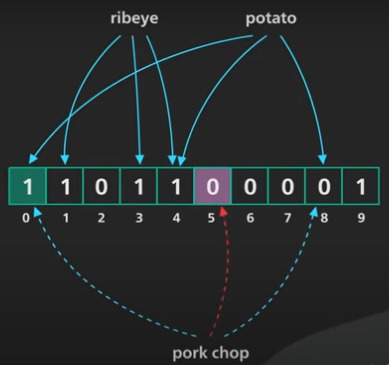

Our approach involved grouping logs older than two years into batches and storing them as compressed archives in S3. Since compressed data is very cheap to store and can be retained for a long time, this was a cost-effective choice. To make retrieval efficient, we incorporated bloom filters into the design.

Bloom Filters

A bloom filter is a space-efficient probabilistic data structure used to test whether an element is part of a set. While it can produce false positives, it’s guaranteed not to produce false negatives, which made it an ideal choice for our needs.

During the archiving process, the ETL processes batches of logs and extracts essential data. For each type of data (that is, each search criterion in the form), we calculate a corresponding bloom filter. Both the archives and their associated bloom filters (stored as a metadata field) are kept in S3.

When a user needs to retrieve data, the system tests each bloom filter against the search criteria. If a match is found, the corresponding archive is downloaded from S3. Although bloom filters might indicate the presence of data that isn’t actually in the archive (a false positive), this trade-off was acceptable for our use case because we reload the logs into a temporary ES index. Finally, we filter out all unnecessary logs, and the web application can read the archived logs the same way it reads current ones. We call this process "hydration".
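The archiving and hydration steps above can be sketched as follows. This is a minimal Python illustration rather than the production code: the class and function names, filter size, hash scheme, and sample logs are all assumptions, and in-memory lists stand in for the S3 archives and the temporary ES index.

```python
import hashlib


class BloomFilter:
    """Probabilistic set: may report false positives, never false negatives."""

    def __init__(self, size_bits=1024, num_hashes=3):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as a bit array

    def _positions(self, item):
        # Derive num_hashes bit positions by salting SHA-256 with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def might_contain(self, item):
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


def build_archive(name, logs, fields):
    """Bundle a batch of logs with one bloom filter per searchable field."""
    filters = {field: BloomFilter() for field in fields}
    for log in logs:
        for field in fields:
            filters[field].add(log[field])
    return {"name": name, "logs": logs, "filters": filters}


def hydrate(archives, criteria):
    """Return matching logs, opening only archives whose filters all match."""
    opened, results = [], []
    for archive in archives:
        if all(archive["filters"][f].might_contain(v) for f, v in criteria.items()):
            opened.append(archive["name"])  # stands in for the S3 download
            # The exact comparison discards logs pulled in by false positives.
            results += [
                log for log in archive["logs"]
                if all(log[f] == v for f, v in criteria.items())
            ]
    return opened, results


# Hypothetical archives with two searchable fields.
archives = [
    build_archive("2021-01", [{"user": "alice", "app": "billing"}], ["user", "app"]),
    build_archive("2021-02", [{"user": "bob", "app": "crm"}], ["user", "app"]),
]
opened, logs = hydrate(archives, {"user": "bob"})
print(opened, logs)
```

Because a bloom filter never produces false negatives, the archive that really contains the data is always opened; a false positive only costs an unnecessary download, which the exact filtering step then cleans up.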

Conclusion

This project was particularly interesting because it tackled an important topic – GDPR compliance – while allowing us to think outside the box by applying lesser-known data structures like bloom filters. Opportunities to solve challenging problems like this don’t come often, making the experience all the more rewarding.

It was also a great learning opportunity as I challenged the existing architecture, which required an additional index database. With the help of my team, we proposed an alternative solution that was both elegant and cost-effective. This project reinforced the importance of collaborative problem-solving and showed how innovative thinking can lead to efficient solutions.

Custom AI Development Services - Grow Your Business Potential

AI Development Company

As a reputable Artificial Intelligence Development Company, Bizvertex provides creative AI Development Solutions for organizations using our experience in AI app development. Our expert AI developers provide customized solutions to meet the specific needs of various sectors, such as intelligent chatbots, predictive analytics, and machine learning algorithms. Our custom AI development services are intended to empower your organization and produce meaningful results as it embarks on its digital transformation path.

AI Development Services That We Offer

Our AI development services unlock the potential of vast amounts of data to drive tangible business results. As a well-established AI solution provider, we specialize in leveraging the power of AI to transform raw data into actionable insights, paving the way for operational efficiency and enhanced decision-making. Here are the AI services we use to turn your vision into reality.

Generative AI

Smart AI Assistants and Chatbot

AI/ML Strategy Consulting

AI Chatbot Development

PoC and MVP Development

Recommendation Engines

AI Security

AI Design

AIOps

AI-as-a-Service

Automation Solutions

Predictive Modeling

Data Science Consulting

Unlock Strategic Growth for Your Business With Our AI Know-how

Machine Learning

We use machine learning methods to enable sophisticated data analysis and prediction capabilities. This enables us to create solutions such as recommendation engines and predictive maintenance tools.

Deep Learning

We use deep learning techniques to develop effective solutions for complex data analysis tasks like sentiment analysis and language translation.

Predictive Analytics

We use statistical algorithms and machine learning approaches to create solutions that predict future trends and behaviours, allowing organisations to make informed strategic decisions.

Natural Language Processing

Our NLP knowledge enables us to create sentiment analysis, language translation, and other systems that efficiently process and analyse human language data.

Data Science

Bizvertex's data science skills include data cleansing, analysis, and interpretation, resulting in significant insights that drive informed decision-making and corporate strategy.

Computer Vision

Our computer vision expertise enables the extraction, analysis, and comprehension of visual information from photos or videos, which powers a wide range of applications across industries.

Industries Where Our AI Development Services Excel

Healthcare

Banking and Finance

Restaurant

eCommerce

Supply Chain and Logistics

Insurance

Social Networking

Games and Sports

Travel

Aviation

Real Estate

Education

On-Demand

Entertainment

Government

Agriculture

Manufacturing

Automotive

AI Models We Have Expertise In

GPT-4o

Llama-3

PaLM-2

Claude

DALL·E 2

Whisper

Stable Diffusion

Phi-2

Google Gemini

Vicuna

Mistral

Bloom-560m

Custom Artificial Intelligence Solutions That We Offer

We specialise in designing innovative artificial intelligence (AI) solutions that are tailored to your specific business objectives. We provide the following solutions.

Personalization

Enhanced Security

Optimized Operations

Decision Support Systems

Product Development

Tech Stack That We Use For AI Development

Languages

Scala

Java

Golang

Python

C++

Mobility

Android

iOS

Cross Platform

Python

Windows

Frameworks

Node JS

Angular JS

Vue.JS

React JS

Cloud

AWS

Microsoft Azure

Google Cloud

ThingWorx

C++

SDK

Kotlin

Ionic

Xamarin

React Native

Hardware

Raspberry Pi

Arduino

BeagleBone

OCR

Tesseract

TensorFlow

Copyfish

ABBYY Finereader

OCR.Space

Go

Data

Apache Hadoop

Apache Kafka

OpenTSDB

Elasticsearch

NLP

Wit.ai

Dialogflow

Amazon Lex

Luis

Watson Assistant

Why Choose Bizvertex for AI Development?

Bizvertex is a leading AI Development Company that provides unique AI solutions to help businesses increase their performance and efficiency by automating business processes. We provide future-proof AI solutions and fine-tuned AI models that are tailored to your specific business objectives, allowing you to accelerate AI adoption while lowering ongoing tuning expenses.

As a leading AI solutions provider, our major objective is to fulfill our customers' business visions through cutting-edge AI services tailored to a variety of business specializations. Hire AI developers from Bizvertex, which provides turnkey AI solutions and better ideas for your business challenges.

#AI Development#AI Development Services#Custom AI Development Services#AI Development Company#AI Development Service Provider#AI Development Solutions

Unlock the Power of Open Source Technologies with HawkStack

In today’s fast-evolving technological landscape, Open Source solutions have become a driving force for innovation and scalability. At HawkStack, we specialize in empowering businesses by leveraging the full potential of Open Source technologies, offering cutting-edge solutions, consulting, training, and certification.

The Open Source Advantage

Open Source technologies provide flexibility, cost-efficiency, and community-driven development, making them essential tools for businesses looking to grow in a competitive environment. HawkStack's expertise spans across multiple domains, allowing you to adopt, implement, and scale your Open Source strategy seamlessly.

Our Expertise Across Key Open Source Technologies

Linux Distributions We support a wide range of Linux distributions, including Ubuntu and CentOS, offering reliable platforms for both server and desktop environments. Our team ensures smooth integration, security hardening, and optimal performance for your systems.

Containers & Orchestration With Docker and Kubernetes, HawkStack helps you adopt containerization and microservices architecture, enhancing application portability, scalability, and resilience. Kubernetes orchestrates your applications, providing automated deployment, scaling, and management.

Web Serving & Data Solutions Our deep expertise in web serving technologies like NGINX and scalable data solutions like Elasticsearch and MongoDB enables you to build robust, high-performing infrastructures. These platforms are key to creating fast, scalable web services and data-driven applications.

Automation with Ansible Automation is the backbone of efficient IT operations. HawkStack offers hands-on expertise with Ansible, a powerful tool for automating software provisioning, configuration management, and application deployment, reducing manual efforts and operational overhead.

Emerging Technologies We are at the forefront of emerging technologies like Apache Kafka, TensorFlow, and OpenStack. Whether you're building real-time streaming platforms with Kafka, deploying machine learning models with TensorFlow, or exploring cloud infrastructure with OpenStack, HawkStack has the know-how to guide your journey.

Why Choose HawkStack?

At HawkStack, our mission is to empower businesses with Open Source solutions that are secure, scalable, and future-proof. From consulting and implementation to training and certification, we ensure your teams are well-equipped to navigate and maximize the potential of these innovations.

Ready to harness the power of Open Source? Explore our full range of services and solutions by visiting HawkStack.

Empower your business today with HawkStack — your trusted partner in Open Source technologies!

Explore Elasticsearch and Why It’s Worth Using?

Elasticsearch is a powerful open-source search engine, built on top of Apache Lucene, that lets you store, search, and analyse immense volumes of data quickly. It handles large data volumes and returns search results in near real time. It also acts as a data store that can hold, index, and retrieve both structured and unstructured data.

It can store and index documents without predefined schemas, since it takes a schema-less approach to document storage and indexing. This adaptability makes it a good fit for workloads with frequently changing data structures or large, dynamic data sets.

Key Features Of Elasticsearch

Revert to a snapshot: It allows you to restore your data and cluster state from an earlier snapshot. This can be used to recover from a system failure, migrate data to a different cluster, or roll back to a previous state.

Integration with Other Technologies: It integrates smoothly with various popular technologies and frameworks. It offers official clients and connectors for programming languages like Java, Python, .NET, and more. It also integrates well with data processing frameworks like Apache Spark and Hadoop, allowing stable interaction and data exchange between systems.

Aggregation Framework: It provides an in-depth aggregation framework that allows you to define a multitude of aggregations on your data. Aggregations work on groups of documents and can be nested to create complex analytical pipelines.
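To show what a nested aggregation computes, the sketch below emulates in plain Python a bucket aggregation (grouping by a field) with a metric aggregation (an average) nested inside each bucket. It only mimics the idea; the function name and the sample orders are hypothetical, and Elasticsearch performs this work inside the cluster.

```python
from collections import defaultdict


def terms_with_avg(docs, bucket_field, metric_field):
    """Group docs by bucket_field, then average metric_field within each bucket."""
    buckets = defaultdict(list)
    for doc in docs:
        buckets[doc[bucket_field]].append(doc[metric_field])
    return {
        key: {"doc_count": len(values), "avg": sum(values) / len(values)}
        for key, values in buckets.items()
    }


# Hypothetical documents.
orders = [
    {"country": "DE", "total": 40.0},
    {"country": "DE", "total": 60.0},
    {"country": "HU", "total": 30.0},
]
print(terms_with_avg(orders, "country", "total"))
# {'DE': {'doc_count': 2, 'avg': 50.0}, 'HU': {'doc_count': 1, 'avg': 30.0}}
```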

How Elasticsearch Fits Into The Search Engine Landscape

Elasticsearch is a mature and adaptable search and analytics engine that plays an essential role in the search engine landscape.

It is designed to handle a wide range of use cases, including full-text search, structured search, geospatial search, and analytics. It also provides a flexible JSON-based query language that allows developers to create complex queries, apply filters, and perform aggregations to extract meaningful insights from the indexed data.

Key Concepts And Terminology

Indices: Containers or logical namespaces that hold indexed data.

Documents: Basic units of information, represented as JSON objects.

Nodes: Instances of Elasticsearch that form a cluster.

Shards: Smaller units of an index that store and distribute data across nodes.

Replicas: Copies of index shards for redundancy and high availability.

Mapping: Defines the structure and characteristics of fields within an index.

Query: Request to retrieve specific data from indexed documents.

Query DSL: Elasticsearch’s domain-specific language for constructing queries.
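The relationship between documents, shards, replicas, and nodes can be illustrated with a short Python sketch. It is a simplification under stated assumptions: Elasticsearch routes a document by hashing its routing value (the document ID by default) modulo the number of primary shards, but it uses a Murmur3 hash, for which MD5 stands in here, and the round-robin replica placement and node names are invented for illustration.

```python
import hashlib


def pick_shard(routing_value, num_primary_shards):
    """Map a routing value (by default the document id) to a primary shard."""
    # MD5 is only a stand-in for the hash function Elasticsearch really uses.
    digest = hashlib.md5(routing_value.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_primary_shards


def place_copies(num_primary_shards, num_replicas, nodes):
    """Spread each shard's primary and replicas over distinct nodes."""
    copies = min(1 + num_replicas, len(nodes))
    return {
        shard: [nodes[(shard + offset) % len(nodes)] for offset in range(copies)]
        for shard in range(num_primary_shards)
    }


nodes = ["node-a", "node-b", "node-c"]  # hypothetical node names
layout = place_copies(num_primary_shards=3, num_replicas=1, nodes=nodes)
for shard, holders in layout.items():
    print(f"shard {shard}: primary on {holders[0]}, replicas on {holders[1:]}")
print("doc-42 is stored in shard", pick_shard("doc-42", 3))
```

The modulo step is also why the number of primary shards is fixed when an index is created: changing it would send existing document IDs to different shards.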

Elasticsearch’s Compatibility With Other Tools And Frameworks

Elasticsearch works well with a wide range of tools, frameworks, databases, and data sources, making it a versatile component of the data ecosystem. It pairs with standard databases and data sources, allowing for fast data ingestion and retrieval. Connectors and plugins allow data to be brought in from MySQL, PostgreSQL, MongoDB, and Apache Cassandra. Elasticsearch can then index and search that data, adding new search capabilities on top of existing data.

Monitoring and managing Elasticsearch is necessary to keep performance and availability high. Elasticsearch includes monitoring APIs and connects with tools such as Elasticsearch Watcher, Elastic APM, and Grafana. These tools allow continuous monitoring of cluster health, resource utilisation, and query performance. Elasticsearch’s APIs and user interfaces also support many administrative tasks such as index management, cluster management, and security setup.

Elasticsearch, whether as a standalone search engine or as part of the ELK stack, delivers powerful search, analytics, and monitoring capabilities to unlock insights from varied data sources.

Tools And Techniques For Monitoring Cluster Health And Performance:

Elasticsearch has built-in monitoring APIs.

Monitoring tools such as Elasticsearch Watcher, Elastic APM, and Grafana can be integrated.

Insights into cluster performance, resource utilisation, and query latency in real-time.
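As a small example of working with the built-in monitoring APIs, the sketch below interprets a response from the cluster health API (`GET _cluster/health`). The helper name and the sample values are hypothetical; the keys used (`cluster_name`, `status`, `number_of_nodes`, `unassigned_shards`) are fields of that API's response.

```python
def summarize_health(health):
    """Turn a _cluster/health response body into a short status line."""
    status = health["status"]
    meaning = {
        "green": "all primary and replica shards are allocated",
        "yellow": "all primaries are allocated, but some replicas are not",
        "red": "at least one primary shard is unallocated",
    }[status]
    return (
        f"{health['cluster_name']}: {status} ({meaning}); "
        f"{health['number_of_nodes']} nodes, "
        f"{health['unassigned_shards']} unassigned shards"
    )


# Hypothetical response body, trimmed to the fields used above.
sample = {
    "cluster_name": "logs-prod",
    "status": "yellow",
    "number_of_nodes": 3,
    "unassigned_shards": 2,
}
print(summarize_health(sample))
```

A check like this could feed an alerting tool, for example to page someone when the status turns red.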

Common Management Tasks:

Scaling the cluster by adding or removing nodes to match load and improve performance.

Using snapshot and restore features to implement backup and disaster recovery methods.

Upgrading Elasticsearch while guaranteeing compatibility with plugins and apps by following official upgrade guides.

Troubleshooting Common Issues And Error Handling:

Reviewing logs and error messages for diagnostics.

Analyzing cluster health and configuration.

Seeking support from the active Elasticsearch community and official channels.

Use Cases And Success Stories:

Popular applications include log analytics, e-commerce search, geospatial analysis, and real-time monitoring.

Companies like Netflix, GitHub, and Verizon have successfully used Elasticsearch for fast data retrieval, personalized recommendations, and efficient log analysis.

Industries And Domains Benefiting From Elasticsearch

Elasticsearch is an adaptable search and analytics engine that has applications in a wide range of businesses and topics. Elasticsearch is useful in the following industries and domains:

IoT and Log Analytics: Managing and extracting insights from massive volumes of machine-generated data.

Log Data Centralization

Real-time Data Ingestion and Analysis

Powerful Search and Query Capabilities

Anomaly Detection and Monitoring

Predictive Maintenance and Optimization

Government and Public Sector: Enhance data management, decision-making, and service delivery.

Open Data Portals

Citizen Services

Fraud Detection and Prevention

Compliance Monitoring

Crisis Management and Emergency Response

In short, Elasticsearch is a powerful and feature-rich search and analytics engine that provides outstanding value to developers, data engineers, and companies. Elasticsearch is definitely worth considering if you’re creating a search engine, monitoring logs, or analysing data for business insights.

Originally published by: Explore Elasticsearch and Why It’s Worth Using?

#Elasticsearch Consulting#Elasticsearch Consulting services#Elasticsearch Framework#Hire Elasticsearch Consultant

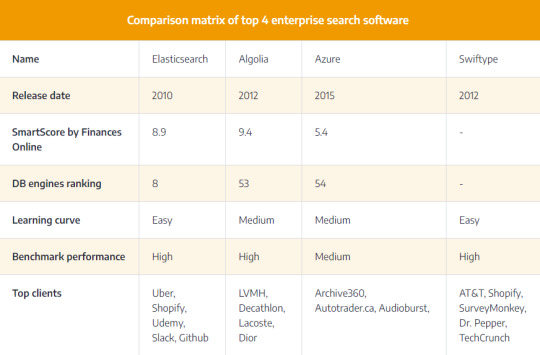

Top 4 Enterprise Search Software in 2021

Nowadays, we cannot imagine our life without Google. We use it daily to find solutions for a wide range of challenges from home issues to business problems.

According to the Internet Live Stats tracker, Google handles over 40,000 search queries every second, which adds up to an enormous 1.2 trillion searches a year. It looks spectacular, right?

These statistics show that millions of people are ready to consume gigabytes of data, and the power of search engines only tends to grow. What’s more, data search has become an integral tool for any business that needs to find and analyze information and improve scalability.

As Peter Morville, a consultant, influencer, and co-author of the best-selling book ‘Information Architecture for the World Wide Web’, once said:

What we find changes who we become.

The core reason for businesses to use data search is the generation of valuable insights that let companies step forward and adapt to constantly changing users’ demands.

This is where enterprise search software comes in: it helps entrepreneurs efficiently search for and analyze data in a matter of seconds. However, what is the best way to find the right technology solution for your specific business needs?

Today we want to guide you through the top 4 enterprise search software solutions covering their pros and cons to help you make the right decision for your business. Besides, we provide a comparison matrix of these tools to bring a high-level overview of them for your guidance.

Elasticsearch

Established: 8 February 2010

Elasticsearch pricing:

Standard - $16/month

Gold - $19/month

Platinum - $22/month

Enterprise – individual

What is Elasticsearch? It is one of the most popular open-source enterprise search tools in the Big Data industry, written in Java, built on Apache Lucene, and exposing a JSON REST API.

Elasticsearch was released to provide multitenant-capable full-text search and analysis of large amounts of data. Its capabilities make Elasticsearch a strong choice for both mid-sized and enterprise-scale projects that need more resources.

What companies use Elasticsearch?

What are the key features of Elasticsearch?

Scalability and fault tolerance

Multitenancy

Operational stability

Schema-free

Why do we use Elasticsearch at Ascendix?

The key reason Ascendix development teams choose Elasticsearch is its high performance. Instead of waiting 1-3 seconds for a search result to be generated, our clients get their data without noticeable delay.

You may ask the following question: “Why is Elasticsearch so fast?”

The answer lies in shards, each of which is a Lucene index made up of inverted indices. Simply put, Elasticsearch builds inverted indices at indexing time, mapping every term to the documents that contain it, so a query looks terms up directly instead of scanning each document.
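To make the idea of an inverted index concrete, here is a minimal sketch in plain Python. It is only an illustration of the data structure, not how Elasticsearch or Lucene implement it; the function names and sample documents are assumptions made up for this example.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each lowercase term to the sorted list of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}


def search(index, query):
    """Return ids of documents containing every query term (AND semantics)."""
    terms = query.lower().split()
    if not terms:
        return []
    result = set(index.get(terms[0], []))
    for term in terms[1:]:
        result &= set(index.get(term, []))
    return sorted(result)


# Hypothetical sample documents, invented for illustration.
docs = {
    1: "fast full text search",
    2: "distributed search engine",
    3: "fast distributed analytics",
}
index = build_inverted_index(docs)
print(search(index, "fast search"))
```

The work of splitting text into terms happens once, at indexing time. A query then only needs a few dictionary lookups and set intersections, which is why results come back quickly even when there are many documents.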

Algolia

Established: 2012

Algolia pricing:

Free – up to 10 units (1000 requests)

Standard - $1/unit/month

Gold - $1.5/unit/month

OEM - individual

Companies that use Algolia

Why use Algolia?

Site search

Voice search

Geo search

Mobile search

What are the key features of Algolia Search?

Search rules

InstantSearch

Personalization

A/B testing

Specific search API

Algolia AI

You can learn more details about each enterprise search software feature on their official website.

Azure Cognitive Search

Established: 2008

Azure Search service pricing:

Free – only 50 MB storage

Basic - $73.73/month

Standard S1- $245.28/month

Standard S2 - $981.12/month

Standard S3 – $1,962.24/month

Storage Optimized L1 - $2,802.47/month

Storage Optimized L2 – individual

What is Azure Cognitive Search?

It is a cloud search service by Microsoft with integrated AI features that let you quickly identify and analyze relevant data at any scale. This way, Azure Cognitive Search allows you to build mobile and web applications with powerful enterprise search features.

Companies that use Azure Search

Key features of Azure full-text search

Powerful indexing

Data processing

Query & Relevancy Ranking

Swiftype

Established: January 2012

Swiftype pricing:

Swiftype App Search - $49/mo

Swiftype Site Search - $79/mo

Swiftype search is a cloud-based and customizable software-as-a-service platform that allows your business to create a powerful search experience and work with data efficiently. Established in 2012, the company has acquired $23 million and has been improving the product continuously.

What companies use Swiftype?

Core features of Swiftype

Powerful Search Algorithm

Content ingestion and syncing

Deep analytics

Real-time indexing

Enterprise Search Software Comparison Matrix

Each search tool mentioned above has its benefits and drawbacks in terms of specific aspects. In order to help you choose the best enterprise search software, let’s look at the comparison of Elasticsearch vs Algolia vs Azure vs Swiftype below.

Final words

So, we have discussed some of the most popular and powerful search solutions. It's worth noting that there is no one-size-fits-all software that meets every business need.

For this reason, take a look at these tools, compare them, and choose the one that best suits your company's demands.

For more information, read the full article 4 Leading Enterprise Search Software to Look For in 2021.


Link

Why Do You Need Elasticsearch Consulting & Implementation Services

Elasticsearch is a distributed, open-source search engine based on the Lucene library. It is designed to provide fast and scalable full-text search, as well as complex analytics and visualization capabilities.


Text

Solr vs. Elasticsearch: Making the Right Choice with Expert Consulting

Solr and Elasticsearch are two of the most popular open-source search engines. Both search engines are known for their performance, scalability, and flexibility. However, there are some key differences between the two search engines.

Solr

Solr is a distributed search engine that is built on top of Apache Lucene. Solr is known for its performance and scalability. Solr is a good choice for applications that need to handle large volumes of data or that need to be available 24/7.

Elasticsearch

Elasticsearch is a distributed search and analytics engine that is built on Apache Lucene. Elasticsearch is known for its scalability and ease of use. Elasticsearch is a good choice for applications that need to handle large volumes of data or that need to be able to perform complex analytics.

Which search engine is right for you?

The best search engine for you will depend on your specific needs and requirements. If you need a search engine that is known for its performance and scalability, then Solr is a good choice. If you need a search engine that is easy to use and that can perform complex analytics, then Elasticsearch is a good choice.

Expert consulting can help you to make the right choice

If you are not sure which search engine is right for you, then it is a good idea to consult with an expert. An expert can help you to assess your needs and requirements and to choose the right search engine for your application.

Here are some additional tips for choosing the right search engine:

Consider your needs and requirements. What do you need your search engine to do? How much data do you need to index? How many users do you expect to use your search engine?

Evaluate the features of each search engine. Solr and Elasticsearch offer a variety of features, such as faceted search, geospatial search, and machine learning. Make sure to choose a search engine that has the features that you need.

Consider your budget. Solr and Elasticsearch are both open-source search engines, but there are also commercial versions of both search engines available. Commercial versions of Solr and Elasticsearch offer additional features and support.

Talk to experts. If you are not sure which search engine is right for you, talk to experts. Experts can help you to assess your needs and requirements and to choose the right search engine for your application.

Solr and Elasticsearch are both powerful search engines. The best search engine for you will depend on your specific needs and requirements. If you are not sure which search engine is right for you, then it is a good idea to consult with an expert. An expert can help you to make the right choice and to get the most out of your search engine.


Text

AWS DevOps Consultant

Job Title: AWS DevOps Consultant

Source: Monster

Job Category: Sales & Marketing Jobs

Company: Infosys Limited

Experience: 5 to 9

Location: Chennai

Ref: 26420885

Summary: Responsibilities: Strong working experience on AWS services – EKS, ECS, EC2, RDS, S3, Cloudwatch, Secrets Manager, Elasticsearch Service, Kinesis, VPC, Route 53, Direct Connect, etc. Strong working experience on AWS services –…


Text

What Are the Factors to Consider When Securing Big Data

Big data is the new toy on the block: a technological commodity that drives development but is also a major point of contention between businesses, customers, and governing bodies. Yet despite the name, big data is often in the possession of small businesses that have not taken appropriate measures to secure it. When so much data is at stake, a breach can be extremely damaging.

With a steady stream of scandals about poor security protections making the news, it is even more important for your data to be protected. Consider these three things when securing big data: your specific configurations, what access you give out, and how you monitor your data.

1. Configurations

It was June of last year that the Exactis leak was discovered. Exactis, a Florida-based marketing data broker, had a misconfigured Amazon Elasticsearch server that exposed close to 340 million records on both American adults and businesses. This included incredibly specific details such as pets, gender of children, and smoking habits. The breach has crippled Exactis; there is little chance that the company will bounce back from this event. Beyond the effect the leak has had on the business, Exactis CEO Steve Hardigree has also been open about the flood of inquiries, threats, and constant stress it has brought into his personal life.

The root of this devastating leak lies in a misconfiguration, and it shows just how configurations can make or break your business. When you are planning out your big data environment, you need to double- and triple-check your configurations.

Tips for checking your configurations:

Security is a complex beast and your data is unique, which in turn means that your approach to security should be customized. This could mean using security software in an unconventional way or engaging a third-party security company.

Think about the little things. Do you trust all of the software interfacing with your data? If not, how can you make it a trusted resource?

Consider getting a third-party Network Security and Architecture Review of your environment. This gives you an outside opinion of exactly how secure your data is. If possible, it is helpful to get this review annually.

2. Access Granted

As you are deciding on configurations, you need to consider who will be granted access and to what.

If the data is meant to stay entirely internal, you need to decide which types of users get which permissions. For example, who is allowed to pull data? Is anyone? If it is not part of the daily workload, under what circumstances is it allowed? By whom?

If you are going to share your data with third parties, there is another host of questions to consider. Do you allow them unlimited access to your data? Who do you allow access to?

Tips for granting internal and external access:

Limit how much external access you allow; if possible, don't allow it at all. This will reduce your attack surface and your inherent risk.

External resources likely don't need access to everything your internal resources can reach. Restrictive groups are a great administrative way of separating who has access to what within your environment.

Not all internal resources are equal, and they should not all be given the same access. You should evaluate how you give out access and document your process for escalating and de-escalating it.

As became clear with Facebook's admission that it left data connections open even after deals had been closed, it is also important to think about what happens when access has been revoked. What are you going to put in place to prevent access when it should no longer be allowed?

Take the access you grant seriously so you don't end up scrambling to make changes after an incident.
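As a rough illustration of the restrictive-groups idea, the sketch below attaches permissions to groups, denies by default, and supports revoking access. The class, group names, and user names are all hypothetical, and a real deployment would use the access controls of its own data platform rather than code like this.

```python
from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    """Hypothetical group-based policy: permissions attach to groups, users join groups."""
    group_permissions: dict = field(default_factory=dict)  # group -> set of permissions
    memberships: dict = field(default_factory=dict)         # user -> set of groups

    def grant(self, user, group):
        self.memberships.setdefault(user, set()).add(group)

    def revoke(self, user, group):
        self.memberships.get(user, set()).discard(group)

    def is_allowed(self, user, permission):
        # Deny by default: a user with no groups has no access.
        return any(
            permission in self.group_permissions.get(group, set())
            for group in self.memberships.get(user, set())
        )


policy = AccessPolicy(group_permissions={
    "internal-analysts": {"read", "export"},
    "external-partners": {"read"},
})
policy.grant("alice", "internal-analysts")
policy.grant("partner-bob", "external-partners")
policy.revoke("partner-bob", "external-partners")  # access ends when the deal does
```

Because permissions live on groups rather than on individual users, external parties can be kept in a narrower group than internal staff. Revoking access is then a single, auditable operation.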

3. Monitoring and Alerting

For everything that can be done to your data, there should be a way for you to monitor it. This is not to say that you need to micromanage every aspect of your big data. However, if an incident were to happen, or more realistically when an incident happens, you should be able to build a picture of what was going on at the time of the event. For this to be possible, you need a way to monitor your data and receive alerts on incidents.

Tips for monitoring and alerting:

Adversaries don't keep regular business hours, so be sure you are monitoring your data at all hours. One way to easily achieve 24/7/365 monitoring is to outsource this function to a Managed Security Services Provider (MSSP).

When setting up alerts, it can be challenging to find a balance between "alert on every single possible event" and "I only want to see important alerts". What if an uptick in those seemingly harmless alerts is the only hint of an insider threat? On the other hand, if you are constantly on edge from alerts, you will easily fall into alert fatigue. An MSSP can act as the filter between you and your alerts, notifying you only after an alert has been investigated and confirmed to be legitimate.

When you are in possession of big data, there is a lot at stake. When a breach of this magnitude can destroy your business, it's critical that you consider these factors.


Text

Grafana Metabase

If you’ve ever built a serious web app, you’ve certainly run into the need to monitor it and to track various application and runtime metrics. Exploring recorded metrics lets you discover different patterns of app usage (e.g., low traffic during weekends and holidays), or, for example, visualize CPU, disk space and RAM usage, etc. As an example, if the RAM usage graph shows that the usage is constantly rising and returns to normal only after the application restart, there may be a memory leak. Certainly, there are many reasons for implementing application and runtime metrics for your applications.

There are several tools for application monitoring, e.g. Zabbix and others. Tools of this type focus mainly on runtime monitoring, i.e., CPU usage, available RAM, etc., but they are not very well suited for application monitoring and answering questions like how many users are currently logged in, what’s the distribution of server response times, etc.

When comparing Grafana and Metabase, you can also consider the following products. Prometheus - An open-source systems monitoring and alerting toolkit. Tableau - Tableau can help anyone see and understand their data. Connect to almost any database, drag and drop to create visualizations, and share with a click.

Here's what people are saying about Metabase. Super impressed with @metabase! We are using it internally for a dashboard and it really offers a great combination of ease of use, flexibility, and speed. Paavo Niskala (@Paavi) December 17, 2019. @metabase is the most impressive piece of software I’ve used in a long time.

For time series, logs, and device operating data, choose Grafana; for analyzing business and operational data, Superset is an option. Metabase is currently second only to Superset in popularity on GitHub. It is also a complete BI platform, but its design philosophy differs considerably from Superset's. Kibana and Metabase are both open source tools. Metabase with 15.6K GitHub stars and 2.09K forks on GitHub appears to be more popular than Kibana with 12.4K GitHub stars and 4.81K GitHub forks.

In this post, I’ll show you how to do real-time runtime and application monitoring using Prometheus and Grafana. As an example, let’s consider the Opendata API of ITMS2014+.

Prometheus

Our monitoring solution consists of two parts. The core of the solution is Prometheus, which is a (multi-dimensional) time series database. You can imagine it as a list of timestamped, named metrics, each consisting of a set of key=value pairs representing the monitored variables. Prometheus features relatively extensive alerting options, has its own query language, and offers basic means for visualising the data. For more advanced visualisation I recommend Grafana.

Prometheus, unlike most other monitoring solutions, uses a pull approach. Each monitored application exposes an HTTP endpoint that serves its metrics, and Prometheus periodically downloads them.

Grafana

Grafana is a platform for visualizing and analyzing data. Grafana does not have its own time series database; it’s basically a frontend to popular data sources like Prometheus, InfluxDB, Graphite, ElasticSearch and others. Grafana allows you to create charts and dashboards and share them with others. I’ll show you that in a moment.

Publishing metrics from an application

In order for Prometheus to be able to download metrics, it is necessary to expose an HTTP endpoint from your application. When called, this HTTP endpoint should return current application metrics, which means we need to instrument the application. Prometheus supports two metrics encoding formats: plain text and protocol buffers. Fortunately, Prometheus provides client libraries for all major programming languages including Java, Go, Python, Ruby, Scala, C++, Erlang, Elixir, Node.js, PHP, Rust, Lisp, Haskell and others.

As I wrote earlier, let’s consider ITMS2014+ Opendata API, which is an application written in Go. There is an official Prometheus Go Client Library. Embedding it is very easy and consists of only three steps.

The first step is to add the Prometheus client library to the imports:

The second step is to create an HTTP endpoint for exposing the application metrics. In this case I use Gorilla mux and Negroni HTTP middleware:

We are only interested in line 2, where we say that the /metrics endpoint will be processed by Prometheus handler, which will expose application metrics in Prometheus format. Something very similar to the following output:
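As a hedged illustration of what the plain-text exposition format looks like, the sketch below renders two metric families using only the Python standard library. It is not the Go client's code. The `render_metric` helper, the `http_requests_total` metric, and all sample values are assumptions made up for this example, and label-value escaping is left out for brevity.

```python
def render_metric(name, metric_type, help_text, samples):
    """Render one metric family in the Prometheus text exposition format.

    samples is a list of (labels_dict, value) pairs.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        if labels:
            label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


# Sample values are invented; only the line layout matters here.
output = render_metric(
    "go_goroutines", "gauge", "Number of goroutines that currently exist.",
    [({}, 12)],
) + render_metric(
    "http_requests_total", "counter", "Hypothetical request counter.",
    [({"code": "200"}, 1027), ({"code": "404"}, 3)],
)
print(output)
```

Each family starts with `# HELP` and `# TYPE` comment lines, followed by one line per sample with optional `key="value"` labels in braces.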

In production, you would usually want some kind of access control, for example HTTP basic authentication and https:

Although we have only added three lines of code, we can now collect the application’s runtime metrics, e.g., number of active goroutines, RAM allocation, CPU usage, etc. However, we did not expose any application (domain specific) metrics.

In the third step, I’ll show you how to add custom application metrics. Let’s add some metrics so that we can answer these questions:

which REST endpoints are most used by consumers?

how often?

what are the response times?


Whenever we want to expose a metric, we need to select its type. Prometheus provides 4 types of metrics:

Counter - is a cumulative metric that represents a single numerical value that only ever goes up. A counter is typically used to count requests served, tasks completed, errors occurred, etc.

Gauge - is a metric that represents a single numerical value that can arbitrarily go up and down. Gauges are typically used for measured values like temperatures or current memory usage, but also “counts” that can go up and down, like the number of running goroutines.

Histogram - samples observations (usually things like request durations or response sizes) and counts them in configurable buckets. It also provides a sum of all observed values.

Summary - is similar to a histogram, a summary samples observations (usually things like request durations and response sizes). While it also provides a total count of observations and a sum of all observed values, it calculates configurable quantiles over a sliding time window.
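To make the difference between the metric types more tangible, here is a minimal Python sketch of a counter, a gauge, and a histogram. These classes are simplified stand-ins written for this illustration, not the API of any Prometheus client library, and the summary type (quantiles over a sliding window) is left out to keep the example short.

```python
import bisect


class Counter:
    """Cumulative value that only goes up."""
    def __init__(self):
        self.value = 0.0

    def inc(self, amount=1.0):
        if amount < 0:
            raise ValueError("counters can only increase")
        self.value += amount


class Gauge:
    """Single value that can go up and down."""
    def __init__(self):
        self.value = 0.0

    def set(self, value):
        self.value = value


class Histogram:
    """Counts observations in buckets and tracks their sum and count."""
    def __init__(self, upper_bounds):
        self.upper_bounds = sorted(upper_bounds)
        self.bucket_counts = [0] * (len(self.upper_bounds) + 1)  # last slot is +Inf
        self.sum = 0.0
        self.count = 0

    def observe(self, value):
        # bisect_left finds the first bucket whose upper bound is >= value.
        self.bucket_counts[bisect.bisect_left(self.upper_bounds, value)] += 1
        self.sum += value
        self.count += 1

    def cumulative(self):
        """Return cumulative counts, the way buckets are exposed (le="...")."""
        total, result = 0, []
        for n in self.bucket_counts:
            total += n
            result.append(total)
        return result


# Hypothetical bucket bounds and durations in seconds.
h = Histogram([0.01, 0.1, 1.0])
for seconds in (0.005, 0.03, 0.03, 2.5):
    h.observe(seconds)
print(h.cumulative(), h.count)
```

Buckets are cumulative: each one counts every observation less than or equal to its upper bound. That is why request counts and percentiles can both be derived from a single histogram.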

In our case, we want to expose the processing time of requests for each endpoint (and their percentiles) and the number of requests per time unit. As the basis for these metrics, we’ve chosen the Histogram type. Let’s look at the code:

We’ve added a metric named http_durations_histogram_seconds and said that we wanted to expose four dimensions:

code - HTTP status code

version - Opendata API version

controller - The controller that handled the request

action - The name of the action within the controller

For the histogram type metric, you must first specify the intervals for the exposed values. In our case, the value is response duration. On line 3, we have created 36 exponentially increasing buckets, ranging from 0.0001 to 145 seconds. In case of ITMS2014+ Opendata API we can empirically say that most of the requests only last 30ms or less. The maximum value of 145 seconds is therefore large enough for our use case.
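The bucket layout described above can be reproduced with a few lines of Python. Note the assumption: the text only gives the count (36) and the range (0.0001 to 145 seconds). The growth factor of 1.5 used below is inferred because it yields a last bucket of about 145.6 seconds, so treat it as a plausible reconstruction rather than the confirmed setting.

```python
def exponential_buckets(start, factor, count):
    """Return `count` upper bounds: start, start*factor, start*factor**2, ..."""
    if start <= 0 or factor <= 1 or count < 1:
        raise ValueError("need start > 0, factor > 1, count >= 1")
    return [start * factor ** i for i in range(count)]


# factor=1.5 is an assumption inferred from the stated range.
buckets = exponential_buckets(0.0001, 1.5, 36)
print(len(buckets), buckets[0], round(buckets[-1], 1))
```

Exponential spacing gives fine resolution where most requests fall (tens of milliseconds) while still covering rare, very slow requests with only a few extra buckets.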

Finally, for each request, we need to record the four dimensions we defined earlier and the request duration. Here, we have two options: modify each handler to record the metrics mentioned above, or create a middleware that wraps the handler and records the metrics. Obviously, we’ve chosen the latter:

As you can see, the middleware is plugged in on line 8 and the entire middleware is roughly 20 lines long. On line 27 to 31, we fill the four dimensions and on line 32 we record the request duration in seconds.
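The wrapping idea itself is language independent. Below is a minimal Python sketch of a middleware-style decorator that times a handler and records the duration under the four dimensions. It is an illustration under stated assumptions, not the Go middleware discussed above: the `instrument` decorator, the `observations` store, and the `list_projects` handler are all hypothetical names.

```python
import time
from collections import defaultdict

# Hypothetical in-memory store: label tuple -> list of observed durations (seconds).
observations = defaultdict(list)


def instrument(version, controller, action):
    """Wrap a handler so every call records its duration under four labels."""
    def decorator(handler):
        def wrapped(request):
            start = time.perf_counter()
            status_code, body = handler(request)
            duration = time.perf_counter() - start
            # Labels: code, version, controller, action.
            observations[(str(status_code), version, controller, action)].append(duration)
            return status_code, body
        return wrapped
    return decorator


@instrument(version="v2", controller="projects", action="list")
def list_projects(request):
    # Stand-in handler; a real one would query a database.
    return 200, "[]"


list_projects({"path": "/v2/projects"})
```

Keeping the measurement in a wrapper means the handlers stay unchanged and every endpoint is measured the same way. In this simplified version, a handler that raises an exception is not recorded.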

Configuration

Since we have everything ready from the app side point of view, we just have to configure Prometheus and Grafana.

A minimum configuration for Prometheus is shown below. We are mainly interested in two settings, how often are the metrics downloaded (5s) and the metrics URL (https://opendata.itms2014.sk/metrics).
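A minimal sketch of what such a configuration might look like, assuming a standard `prometheus.yml`. Only the 5s interval and the metrics URL come from the text above; the job name `opendata` is a hypothetical placeholder.

```yaml
global:
  scrape_interval: 5s        # how often metrics are downloaded

scrape_configs:
  - job_name: opendata       # hypothetical job name
    scheme: https
    metrics_path: /metrics
    static_configs:
      - targets: ['opendata.itms2014.sk']
```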

A minimal Grafana configuration:

Note: As we can see, a non-TLS port 3000 is exposed, but don’t worry: there is an NGINX in front of Grafana listening on port 443, secured by a Let’s Encrypt certificate.

Monitoring

Finally, we get to the point where we have everything we need. In order to create some nice charts it is necessary to:

Open a web browser and log into Grafana

Add Prometheus data source

Create dashboards

Create charts

An example of how to create a chart showing the number of HTTP requests per selected interval is shown on the following figure.

Similarly, we’ve created additional charts and placed them in two dashboards as shown on the following figures.

Summary

In this post, we have shown that the application and runtime monitoring may not be difficult at all.

Prometheus client libraries allow you to easily expose metrics from your applications, whether written in Java, Go, Ruby or Python. Prometheus even allows you to expose metrics from offline applications (behind corporate firewalls) or batch applications (scripts, etc.). In this case, a push approach can be used: the application pushes metrics into a push gateway, and the push gateway then exposes the metrics as described in this post.

Grafana can be used to create various charts and dashboards that can be shared. Even static snapshots can be created, which lets you capture interesting moments and analyze them later.

Reports and Analytics

Powerful Enterprise Grade Reporting Engine

Elegant SQL interface for people who need a little more power

Widgets for Creating Bar Charts, Pie Charts, Line Graphs

Multiple Dashboards with different personal widgets

Create, organize, and share dashboards with others

Dashboards

Open Source

Completely Open Source

Community Contribution Available

Simple to Use even for beginners

Install on premises or in the Cloud

Free and Simple to Use

Integrations

Integration with any Data Source in SQL

PostgreSQL, MySQL, Maria DB

Oracle, MS SQL or IBM DB2

Ready Plugins Available


Altnix Advantage

Metabase Consulting Services

Altnix provides Professional services for Consulting on Metabase products. Following items are covered:

Consulting Services for Metabase business intelligence tool

Best practices and guidelines on how to adopt the Metabase business intelligence tool

Architecture Design for Metabase

Technology Roadmap for Metabase adoption at your organization

Solution Design on using Metabase business intelligence tool

Metabase Implementation and Deployment

Altnix will implement Metabase based business intelligence and Analytics solution keeping in mind the business requirements. Implementation includes the following:

Integration with different databases and data sources

Extract Transform Load (ETL) Design

Designing Queries to be used in Metabase

Widgets and Dashboards design in Metabase

Reports Design in Metabase

Development and Design Implementation

UAT and Testing Activities

Production Implementation and Go Live

Warranty Support Period Included

Metabase Customization


Altnix will customize your Metabase installation so that it is a better fit for your business environment.

Creating new visualizations and dashboards as per customer needs

Creating custom reports and charts as per customer needs

Adding new scripts, plug-ins, and components if needed

Third-Party Integration

Altnix will integrate Metabase business intelligence tools with other third-party tools to meet several use cases.

Ticketing systems such as LANDesk, BMC Remedy, Zendesk, and ((OTRS)) Community Edition

ITSM Tools such as ((OTRS)) Community Edition, GLPi Network Edition, ServiceNow, and HP Service Manager

Monitoring tools such as Zabbix, Nagios, OpenNMS, and Prometheus

IT Automation Tools such as StackStorm, Ansible, and Jenkins

24x7 AMC Support Services

Altnix offers 24x7 support services on an AMC or per-hour basis for new or existing installations of the Metabase business intelligence tool. Our team of experts is available round the clock and responds to you within a predefined SLA.

Case Studies

Knute Weicke

Security Head, IT

Fellowes Inc, USA

Altnix was an instrumental partner in two phases of our Security ISO needs. The first being a comprehensive developed Service/Ticketing system for our global offices. The second being that of an Asset Management tool that ties all assets into our Ticketing systems to close a gap that we had in that category. They are strong partners in working towards a viable solution for our needs.

The Altnix team was very easy to work with and resolved our needs in a timely manner. Working with Altnix allowed us to focus on our core business while they handled the technical components to help streamline our business tools. We have found a strategic partner in Altnix.

Johnnie Rucker

General Manager

Encore Global Solutions, USA

White Papers


Link

If you are looking for Elasticsearch Consulting Services or Apache Solr Consulting services, you can contact us and we will help you choose the right technology that fits the best for your business application.

Dm us on [email protected] for a free consultation.

#Elasticsearch Development company#Apache Solr Development Services#Apache Solr Consulting Services#Apache Solr Development Company


Text

What specific Elasticsearch consulting services does Nextbrick offer to businesses?

Nextbrick offers a comprehensive range of Elasticsearch consulting services to businesses, tailored to meet their specific needs and objectives. These services include:

Elasticsearch Implementation: Nextbrick assists businesses in designing, planning, and deploying Elasticsearch clusters from scratch. They configure the architecture, indices, and data modeling to align with the organization's use cases.

Performance Optimization: Nextbrick conducts performance assessments and fine-tunes Elasticsearch clusters to improve query response times, indexing rates, and overall system efficiency.

Scalability Planning: Nextbrick helps businesses plan for future growth by optimizing Elasticsearch clusters for scalability, ensuring they can handle increased data volumes and traffic.

Indexing and Data Modeling: Nextbrick offers expertise in designing effective data models, mapping strategies, and analyzers to optimize data indexing and improve search relevance.

Query Optimization: Nextbrick fine-tunes search queries and filtering criteria to enhance search accuracy and query performance, resulting in faster and more relevant search results.

Security and Compliance: Nextbrick ensures that Elasticsearch clusters are configured with robust security measures, including access controls, encryption, and compliance with industry regulations like GDPR or HIPAA.

Log and Data Analysis: Nextbrick assists businesses in setting up Elasticsearch for log and data analysis, enabling real-time monitoring, data visualization, and actionable insights from large datasets.

Elasticsearch Cluster Management: Nextbrick provides ongoing support for Elasticsearch clusters, including health monitoring, node management, and issue resolution to maintain optimal performance.

Custom Integrations: Nextbrick integrates Elasticsearch with other technologies and data sources, such as databases, content management systems, and machine learning platforms, to create comprehensive solutions.

Elasticsearch Training: Nextbrick offers training programs and workshops to empower organizations with the knowledge and skills needed to manage and maintain Elasticsearch environments effectively.

Migration and Upgrades: Nextbrick assists with Elasticsearch version upgrades and data migrations to ensure a smooth transition while preserving data integrity.

Consulting and Advisory Services: Nextbrick provides strategic consulting and advisory services to help businesses align Elasticsearch with their overall IT and data strategies.

Performance Audits: Nextbrick conducts in-depth performance audits to identify bottlenecks, inefficiencies, and areas for improvement in existing Elasticsearch deployments.

Disaster Recovery Planning: Nextbrick helps businesses establish robust backup and disaster recovery strategies to safeguard Elasticsearch data and ensure business continuity in the event of failures or data loss.

Geo-spatial Data Solutions: Nextbrick specializes in handling geo-spatial data within Elasticsearch, making it ideal for location-based applications and services.

Nextbrick's Elasticsearch consulting services are designed to assist businesses in leveraging Elasticsearch's capabilities to their advantage, whether it's for search, analytics, monitoring, or other data-related needs. These services are adaptable to various industries and use cases, providing tailored solutions that drive business success.


Text

Achieve Your Business Success with Pega Cloud Computing

Organizations that want their applications to scale with consistent performance and keep up with a quickly evolving security landscape rely on cloud computing. Migrating customer engagement and digital process automation applications to the cloud needs an architecture developed for the task. This is where Pega Cloud Services come in.

What are Pega Cloud Services?

Pega Cloud Services is a managed cloud that offers the tools, environments, and operational support organizations need. Its purpose is to enable businesses to deliver applications and value to their organizations faster. With Pega Cloud Services, clients can deploy globally on secured infrastructure to meet strict security and compliance requirements.

What Can You Find in Pega Cloud Services?

Cloud-based microservices in Pega Platform:

Pega Platform uses container technology to provide Elasticsearch as a microservice. This change shows how the Pega Platform is developing into a cloud-native application.

Development of global footprint with support for the AWS Stockholm region

Improved Reliability & Streamlined Upgrade Processes

Now with the latest Pega Infinity™ release features, clients can optimize their business goals and processes. In addition to that, Pega Cloud Services infrastructure improvements drive the following advantages:

Automatically upgrades the database to Amazon RDS PostgreSQL 11.4

Clients’ global footprint expansion with support for the AWS Mumbai region

Support Cassandra 3.11.3 to enhance the system’s reliability & reduce memory footprint

Salient features Of Pega Cloud Services:

Comprehensive, global client support

Predictive Diagnostic cloud – Focus more on action not on management

Improved connectivity and networking solutions

Integrate with scalability, security and stability

Deliver apps more quickly without compromising the quality

Pega’s Happy Client Stories

Xchanging is a business process and technology services provider and integrator, owned by DXC Technology. It provides technology-enabled business services to the commercial insurance industry. Since adopting Pega Cloud Services, the company has seen significant changes in its business, such as:

Improved regulatory control

Enhanced application security

Decreased transaction settlement time from months to seconds

Enhanced client operations by 50%

The Royal Bank of Scotland (RBS) is a major retail and commercial bank in Scotland. With the implementation of Pega, RBS has experienced important performance improvements including:

5X Increase in digital lending

20% Improvement in balance retention

35% Fewer impressions (waste)

21 Channels integrated in 4 years

Crochet Technologies

Crochet Technologies is a Global Information Technology (IT) Services and Solutions company.

Crochet’s Pega competency helps streamline and automate business processes across industries. We offer end-to-end services in the Pega space, from business consulting and design to development and managed support. With deep business acumen, world-class project delivery and leadership capabilities, we help companies get the best results from the cloud while keeping their business data safe.

To keep in touch, follow us on our LinkedIn Page. For any business requirements reach us directly on [email protected]
